Beyz Coding Assistant vs ChatGPT for Coding Interview Prep (2026)

January 26, 2026

TL;DR

If you use ChatGPT for coding interview prep, you’ll often get solid explanations, but you still have to design the workflow yourself: what to ask, how to validate answers, and how to review. Beyz Coding Assistant is built around that missing workflow. It enforces structure (approach → edge cases → complexity), prompts you to check your reasoning out loud, and closes each session with a reusable recap.

The real difference isn’t “smarter answers,” but fewer prep gaps: structured walkthroughs, thought validation, complexity prompts, and a review loop fed by IQB, the interview question bank that serves as the input layer. If you’re preparing for LeetCode-style rounds or system design interviews and keep feeling “I solved it, but I can’t explain it,” Beyz tends to fit better.

Introduction: why this comparison matters in 2026

In coding interviews, the hardest part is rarely typing. It’s narration—turning a messy first thought into a clean plan, then defending trade-offs (data structures, Big-O, edge cases) while someone watches you think.

Many candidates use ChatGPT during prep and hit the same wall. The answers are fine, but the session itself is unstructured. You bounce between “explain the solution,” “what’s the complexity,” “wait, what about duplicates,” and “help me talk through it,” without a consistent system. You may feel productive, yet still freeze when asked to explain your reasoning under time pressure.

That’s why comparing a general model like ChatGPT with a purpose-built AI coding interview assistant is useful. Beyz is designed to behave more like an interview partner: it nudges structure, validates your thinking, and makes sure you leave with a reusable recap—not just a one-off solution. IQB matters here as well: a question bank isn’t just content, it’s the input layer that determines whether your practice actually resembles real interviews.

If you want to anchor your prep in realistic prompts, start from the Technical & coding interview questions hub, then filter into a company page (for example, OpenAI interview questions) when you’re ready to focus your reps. For related context on how this fits into live rounds, see Beyz AI vs interviewing.io: live copilot vs anonymous mocks. For the “input layer,” the IQB interview question bank is where you can pull company- and role-shaped practice sets before you run timed sessions.

Quick overview

- Beyz Coding Assistant — Best for structured prep, explanation practice, and a closed review loop (IQB → solve → recap)

- ChatGPT — Best for quick concept refresh, brainstorming approaches, and “what if” variations (when you can self-validate rigorously)

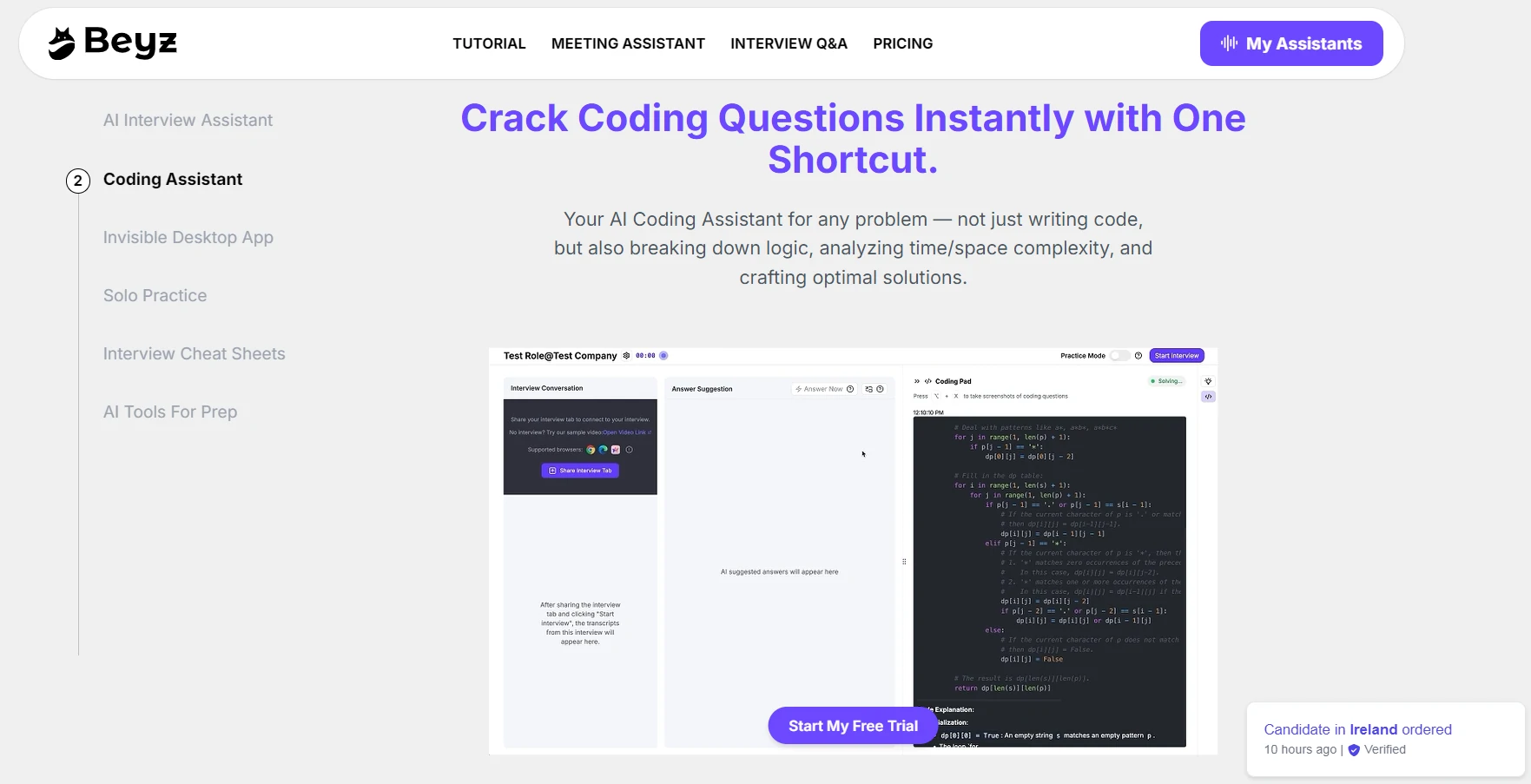

Beyz Coding Assistant

Beyz Coding Assistant is designed specifically for mock coding and system design interviews. Instead of focusing only on producing an answer, it trains the part interviews actually grade: reasoning, communication, and decision-making under pressure.

Why it feels different in practice

- On-screen question context keeps you in interview mode instead of copy-pasting prompts.

- Step-by-step reasoning scaffold forces a clean sequence: clarify → plan → validate → implement → test.

- Complexity prompts by default make Big-O analysis part of the solution, not an afterthought.

- Explain-as-you-code support helps you maintain a consistent narration (“why this DS,” “what invariant,” “what edge case”).

A small example

I used to solve medium problems and still feel embarrassed, because my explanation sounded like a screen recording of panic. With Beyz, the structure nudged me to say: “I’ll start with a hash map approach, check edge cases like duplicate values, then analyze complexity.” That one sentence alone turns chaos into signal, and signal is what interviewers listen for.
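The anecdote doesn't name the problem. This minimal Python sketch therefore assumes a Two Sum-style task: return the indices of two numbers that add up to a target. It shows how that spoken plan maps onto code. The hash map is the approach, duplicate values are the edge case to confirm, and the complexity is stated before implementing. The function name `two_sum` is illustrative and does not come from any Beyz material.

```python
from typing import Optional


def two_sum(nums: list[int], target: int) -> Optional[tuple[int, int]]:
    """Return indices (i, j), i < j, with nums[i] + nums[j] == target, or None.

    Approach: one pass with a hash map from value -> index.
    Complexity: O(n) time, O(n) extra space.
    """
    seen: dict[int, int] = {}
    for j, x in enumerate(nums):
        # Edge case: look up the complement *before* storing x,
        # so a single element is never paired with itself.
        i = seen.get(target - x)
        if i is not None:
            return (i, j)
        # Duplicate values: overwriting keeps the latest index,
        # which is still a valid partner for later elements.
        seen[x] = j
    return None


print(two_sum([2, 7, 11, 15], 9))
```

For example, `two_sum([3, 3], 6)` returns `(0, 1)`. By contrast, `two_sum([3], 6)` returns `None` because one element is never reused.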

ChatGPT

ChatGPT is excellent for rapid learning and iteration. For coding interview prep, it shines at:

- explaining common patterns (two pointers, monotonic stacks, DP states),

- generating test cases,

- suggesting alternative approaches,

- helping debug pasted code.

The risk isn’t just occasional wrong answers. It’s workflow drift. Without strict constraints, you can leave a session feeling productive while skipping what interviews actually grade: constraints, invariants, edge cases, complexity, and explanation quality.

Where ChatGPT shines

- Fast concept refresh (“remind me how sliding window works”)

- Promptable depth (proofs, counterexamples, complexity)

- Variations (“what if input is streaming?”)

- Mock interviewer role-play—if you ask correctly
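Consider a “what if input is streaming?” variation. This hypothetical sketch shows how a one-pass hash-set idea adapts when values arrive one at a time and you can't see the whole input up front. The pair-sum problem and the class name `StreamingPairFinder` are assumptions for illustration, not taken from any specific prompt.

```python
class StreamingPairFinder:
    """Report whether any two values seen so far sum to `target`.

    Hypothetical streaming variant: O(1) expected time per value, O(n) space.
    """

    def __init__(self, target: int) -> None:
        self.target = target
        self.seen: set[int] = set()
        self.found = False

    def push(self, x: int) -> bool:
        # Check the complement before adding x, so x is never paired with itself.
        if self.target - x in self.seen:
            self.found = True
        self.seen.add(x)
        return self.found


finder = StreamingPairFinder(10)
print([finder.push(x) for x in [1, 4, 6, 2]])
```

With a target of 10, pushing 1, 4, 6, 2 returns `False, False, True, True`. The pair (4, 6) completes on the third value, and the answer stays `True` afterward.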

Why Beyz stands out for interview performance

If your goal is “learn DSA,” ChatGPT may be enough. If your goal is “perform in an interview,” the difference is the closed loop:

- IQB as the input layer: You practice company- and role-shaped questions, not random prompts, using the IQB interview question bank.

- Interview-shaped structure: Beyz pushes you to narrate clearly and justify decisions.

- Practice that feels like real rounds: timed sessions, explanation-first flow, and pressure simulation.

- Real-time copilot support: useful when your bottleneck is speaking clearly while thinking.

The result isn’t more questions solved—it’s fewer moments where you think, “I know this, but I can’t explain it.”

The 4-part prep loop that actually improves interview scores

Many candidates accidentally reinvent this loop with ChatGPT. Beyz simply makes it the default.

1 Input: choose questions like a database (IQB)

Treat your question bank like a query engine:

- Filter by company and role

- Mix expected patterns with unfamiliar variants

- Track “can solve” vs “can explain under pressure”
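If you keep your own list of practice questions, the “query engine” idea takes only a few lines of Python. The `Question` record, the `practice_set` function, and the company name "Acme" are hypothetical. They are for illustration only and do not reflect IQB's actual schema or interface.

```python
from dataclasses import dataclass


@dataclass
class Question:
    # Hypothetical record for illustration; not IQB's actual schema.
    title: str
    company: str
    role: str
    pattern: str
    can_solve: bool = False
    can_explain: bool = False  # "can explain under pressure"


def practice_set(bank: list[Question], company: str, role: str,
                 known_patterns: set[str], max_unfamiliar: int = 1) -> list[Question]:
    """Filter by company and role, then mix expected patterns with a few unfamiliar ones."""
    matches = [q for q in bank if q.company == company and q.role == role]
    expected = [q for q in matches if q.pattern in known_patterns]
    unfamiliar = [q for q in matches if q.pattern not in known_patterns]
    # Stable sort: "can solve but can't explain yet" questions move to the front.
    expected.sort(key=lambda q: not (q.can_solve and not q.can_explain))
    return expected + unfamiliar[:max_unfamiliar]


bank = [
    Question("Valid Parentheses", "Acme", "SWE", "stack", can_solve=True, can_explain=True),
    Question("Two Sum", "Acme", "SWE", "hash map", can_solve=True),
    Question("Word Ladder", "Acme", "SWE", "bfs"),
    Question("LRU Cache", "Other", "SWE", "design"),
]
print([q.title for q in practice_set(bank, "Acme", "SWE", {"stack", "hash map"})])
```

The sort puts “can solve, can't explain yet” items first. That keeps the set focused on the solve-versus-explain gap this step asks you to track.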

2 Solve: plan before you code

The talk track interviewers love is boring—because it works:

- Clarify constraints and edge cases

- State the approach and why

- Commit to time/space complexity

- Implement

- Walk through a small example

With ChatGPT, you must enforce this every time. Beyz does it by default.

3 Validate: thought validation beats “looks correct”

Most points are lost here:

- missing corner cases,

- incorrect complexity claims,

- solutions that pass samples but break invariants.

A good assistant asks, “What’s the invariant?” and “Where could this fail?” Beyz leans into that interviewer mindset.
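One concrete way to ask “What's the invariant?” and “Where could this fail?” has two parts. First, state the invariant in code. Then compare the solution against a brute-force reference on many small random inputs. The sketch below uses a sliding-window problem (longest substring without repeated characters) purely as an example, and the function names are illustrative.

```python
import random


def longest_unique_substring(s: str) -> int:
    """Length of the longest substring of s with no repeated characters.

    Invariant: s[left:right + 1] never contains a repeated character.
    Complexity: O(n) time; O(k) space for k distinct characters.
    """
    last_seen: dict[str, int] = {}
    left = best = 0
    for right, ch in enumerate(s):
        # If ch already appears inside the window, move left just past it.
        if last_seen.get(ch, -1) >= left:
            left = last_seen[ch] + 1
        last_seen[ch] = right
        window = s[left:right + 1]
        # Debug-only invariant check; remove it to keep the O(n) bound.
        assert len(set(window)) == len(window), "invariant broken"
        best = max(best, right - left + 1)
    return best


def brute_force(s: str) -> int:
    # Slow reference answer: check every substring directly.
    return max(j - i for i in range(len(s) + 1) for j in range(i, len(s) + 1)
               if len(set(s[i:j])) == j - i)


def differential_test(trials: int = 500, seed: int = 0) -> None:
    # Short strings over a tiny alphabet hit duplicates and edge cases often.
    rng = random.Random(seed)
    for _ in range(trials):
        s = "".join(rng.choice("abc") for _ in range(rng.randint(0, 8)))
        assert longest_unique_substring(s) == brute_force(s), s


differential_test()
print(longest_unique_substring("abcabcbb"))
```

Short random strings over a three-letter alphabet contain repeats constantly. A window that shrinks incorrectly therefore tends to fail within a few trials, which is exactly the “passes samples but breaks invariants” case.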

4 Review: compress into reusable memory

Your goal isn’t 300 problems. It’s to compress each pattern into:

- when to use it,

- key invariant,

- complexity,

- common pitfalls,

- one clean explanation script.
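The five items above fit naturally into a small, fixed note format. Here is a hypothetical Python sketch of such a recap card. The `PatternCard` name and fields simply mirror the list and are an illustration, not a Beyz feature. The card is filled in for the sliding-window pattern as an example.

```python
from dataclasses import dataclass, field


@dataclass
class PatternCard:
    """A compressed recap for one pattern (hypothetical note format)."""

    pattern: str
    when_to_use: str
    invariant: str
    complexity: str
    pitfalls: list[str] = field(default_factory=list)
    script: str = ""  # the one clean explanation you rehearse out loud

    def render(self) -> str:
        # One line per field, so cards stay short enough to review quickly.
        return "\n".join([
            f"Pattern: {self.pattern}",
            f"When to use: {self.when_to_use}",
            f"Invariant: {self.invariant}",
            f"Complexity: {self.complexity}",
            "Pitfalls: " + "; ".join(self.pitfalls),
            f"Script: {self.script}",
        ])


sliding_window = PatternCard(
    pattern="Sliding window",
    when_to_use="Contiguous subarray/substring with a condition you can update incrementally",
    invariant="The window [left, right] always satisfies the condition",
    complexity="O(n) time; each index enters and leaves the window at most once",
    pitfalls=["Shrinking with if instead of while", "Off-by-one in window length"],
    script="I'll grow the window on the right and shrink it from the left only when the condition breaks.",
)

print(sliding_window.render())
```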

Mini STAR-style story (technical)

Interview question: “How would you approach and explain a solution to a graph problem under time pressure?”

Situation: In a recent mock coding round, I was given a graph traversal problem and 30 minutes to solve and explain it live. I could get to a working solution, but my explanations tended to jump around, especially when asked about edge cases and complexity.

Task: I needed to present a clear, interview-ready approach—one that explained why the algorithm worked, not just that it worked, and that held up when the interviewer interrupted with follow-up questions.

Action: I started by stating the high-level approach (BFS over an adjacency list), clarified constraints and edge cases, and committed early to the time and space complexity. I practiced this flow using company-tagged questions from IQB, rehearsing the explanation out loud. For parts I felt shaky on, I used ChatGPT to revisit the core graph concepts, then returned to a structured mock session to practice explaining the solution cleanly from start to finish.

Result: I still solved the same number of problems, but my explanations became calmer and more linear. I stopped backtracking mid-sentence, handled follow-up questions more confidently, and could justify trade-offs without losing my train of thought.

Takeaway: Solving the problem proves correctness; explaining it clearly proves readiness. General AI helps you relearn concepts, but a structured interview workflow is what turns solutions into confident answers.
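The story doesn't give the exact problem, so this sketch assumes a common variant: the fewest edges between two nodes in an unweighted graph, solved with BFS over an adjacency list. The docstring carries what you would say out loud, namely the invariant and the complexity. The code also handles the usual edge cases: start equals goal, an unreachable goal, and cycles. The function name and the dict-of-lists input format are assumptions.

```python
from collections import deque


def shortest_path_length(adj: dict[int, list[int]], start: int, goal: int) -> int:
    """Fewest edges from start to goal in an unweighted graph, or -1 if unreachable.

    Invariant: BFS discovers nodes in nondecreasing distance order,
    so the first time goal is discovered, its distance is minimal.
    Complexity: O(V + E) time, O(V) space.
    """
    if start == goal:
        return 0
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in adj.get(node, []):
            if nxt not in dist:  # mark on discovery, so cycles can't requeue a node
                dist[nxt] = dist[node] + 1
                if nxt == goal:
                    return dist[nxt]
                queue.append(nxt)
    return -1


adj = {0: [1, 2], 1: [3], 2: [3], 3: [4], 4: []}
print(shortest_path_length(adj, 0, 4))
```

With this `adj`, `shortest_path_length(adj, 0, 4)` returns `3`, while `shortest_path_length(adj, 4, 0)` returns `-1` because node 4 has no outgoing edges.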

Decision matrix

| Prep Stage | ChatGPT is better when… | Beyz is better when… |
|---|---|---|
| Pattern learning | You need a fast concept refresh or multiple solution variations | You want structured practice after learning a pattern |
| Speed & accuracy | You want quick hints while stuck | You want timed mock sessions that mirror real interviews |
| Explanation | You can prompt it to role-play an interviewer | You want a default narration scaffold without extra prompting |
| Complexity | You remember to explicitly ask for Big-O analysis | You want complexity prompts built into every solution |
| Review loop | You manually ask for summaries and templates | You want recap-first habits with reusable notes |

So… which should you use in 2026?

Use ChatGPT if you’re early in prep, exploring patterns, and can self-check rigorously.

Use Beyz if you can solve but struggle to explain, want a consistent loop, and need interview-like structure by default.

The most effective setup for many candidates is simple:

- ChatGPT for learning and exploration

- Beyz for performance practice and review loops

That division mirrors how interviews are actually scored.

A practical way to start

If your prep currently feels scattered—random tabs, random prompts, and no clear sense of progress—start by simplifying the workflow rather than adding more tools.

Begin with a structured coding interview questions hub to anchor your practice around realistic prompts and recurring patterns, rather than isolated problems. Reviewing curated technical and system design questions (for example, in a centralized interview Q&A library like Beyz Interview Q&A Hub) helps you focus on what actually gets asked, not just what’s popular.

From there, use an interview question bank as an input layer—such as the Interview Question Bank—to filter questions by company, role, and topic. Practice fewer questions, but force yourself to explain the approach, complexity, and trade-offs out loud, then revisit the same set after review.

Beyz Coding Assistant is designed to support this loop during coding and system design practice, especially when your bottleneck isn’t solving problems but explaining them clearly under pressure. To see how this workflow compares in practice, you can also read related breakdowns like Beyz AI vs CoderPad.io: Prep Copilot vs Interview IDE.

In short, try a single workflow:

- Choose realistic questions from a structured interview question bank

- Practice with a coding interview assistant that enforces explanation

- Review with short, reusable recaps instead of endless new questions

References

- Indeed Career Guide: How to prepare for coding interviews step by step. https://www.indeed.com/career-advice/interviewing/how-to-prepare-for-coding-interview

- LeetCode: Curated “Top Interview Questions” and pattern-based practice sets

- GeeksforGeeks: Big-O notation and algorithm complexity explanations. https://www.geeksforgeeks.org/analysis-algorithms-big-o-analysis/

Frequently Asked Questions

How do I prepare for a coding interview effectively?

Focus on patterns plus explanation. For each question, force the same loop: clarify constraints, state an approach, name the invariant, commit to time/space complexity, then implement and walk through an example. The win isn’t doing 200 problems—it’s making your reasoning repeatable under pressure.

Is LeetCode enough to pass technical interviews?

Often necessary, not always sufficient. Many candidates fail on communication, edge cases, and trade-off reasoning (or system design/behavioral alignment), not raw problem solving. Use LeetCode for timed reps, but practice explaining decisions and handling follow-ups like a real interview.

Where can I find real interview questions by company?

Treat company-specific prompts as directional signals, not guarantees. The reliable approach is to combine a curated bank with company/role tags (to pick realistic sets), canonical pattern practice (to cover the fundamentals), and mock sessions (to pressure-test how you explain). Look for repeating themes and train those.

How do I practice explaining my code in an interview?

Speak before you code. Use a fixed talk track: approach → why it works → edge cases → complexity → walkthrough. Then redo the same problem later and practice explaining it faster and cleaner. The goal is to make your narration boringly consistent.

Are AI tools like Beyz reliable for coding interview practice?

Yes—if you validate outputs and force structure. General tools can drift into unverified solutions unless you constrain them. A purpose-built coding interview assistant helps by defaulting to the interview sequence (plan, complexity, edge cases, recap) so you don’t skip what’s graded.

Related Links

- https://beyz.ai/blog/coding-interview-question-bank-beyz-ai-practice-2026

- https://beyz.ai/blog/ai-interview-assistants-2025-complete-practical-guide

- https://beyz.ai/blog/how-real-time-ai-interview-assistants-work

- https://beyz.ai/blog/24-hour-coding-interview-cram-plan-with-beyz