Coding Interview Question Bank + Beyz AI Practice (2026)

January 26, 2026

TL;DR

An interview question bank saves time when it works like a database, not a “top 50” list. This is especially true for a coding interview question bank, where scope selection matters.

The real win is the loop: retrieve the right questions → timed attempt → review → redo.

For 2026 tech interviews, treat prep as three layers: coding + system design + behavioral. Pair a bank (IQB-style) with a practice surface (LeetCode) and a pressure test (Beyz mock interviews).

If you’re comparing options, you may also like “Best interview question bank tools” and “Coding interview question bank by company.”

Why an Interview Question Bank Changes Prep Efficiency

Most tech candidates don’t fail because they “didn’t work hard.” They fail because their prep is scattered. A question bank changes the geometry of your time: instead of hunting for what to practice, you retrieve a focused set and spend the hours actually building recall.

A good bank supports a simple idea: coverage + retrieval + repetition. Coverage means you’re not overfitting to one topic (hello, endless sliding window). Retrieval means you can pull questions by role, round type, and difficulty without opening 20 tabs. Repetition is the unsexy part that actually sticks—redoing the same pattern until you can explain it cleanly under pressure.

Have you ever finished a “grind week” and still felt oddly unprepared?

A layered taxonomy matters here because tech interviews test more than one skill:

- Coding: pattern recognition + correctness under constraints + communication.

- System design: tradeoffs, bottlenecks, and “good enough” decisions with incomplete data.

- Behavioral: structured storytelling, self-awareness, and consistent signal across questions.

When your prep tool doesn’t separate those layers, you end up mixing incompatible goals. You might get faster at solving problems but worse at explaining. Or you might write beautiful STAR stories but blank when asked for edge cases.

The best question bank doesn’t just “give you questions.” It gives you a consistent way to retrieve the right practice set and repeat it until it becomes muscle memory.

What Makes a Great Question Bank

Most lists fail for a boring reason: they’re not designed for decision-making. They’re designed for clicks. You get “100 Google questions,” but no tagging, no rubric, no hint of which role or round type they map to. It’s like being handed a grocery aisle and being told, “Cook dinner.”

Random lists also encourage a subtle form of procrastination. You feel productive because you’re reading. But you’re not building recall under time pressure, and you’re not tracking what you keep getting wrong.

So what should you look for in a question bank?

Mini checklist:

- Tags that match reality: role, company, topic, round type (coding vs system design vs behavioral).

- A retrieval workflow: filters/search that lets you build a set fast.

- A review rubric: what “good” looks like (not just “here’s an answer”).

- Redo support: an easy way to revisit questions you missed.

- Notes-friendly: exportable, linkable, or easy to track in Notion/GitHub.

If you’ve only got a few hours per week, what would you rather do: scroll for the “perfect list,” or run a tight practice loop with a smaller set?

IQB as a Database Asset

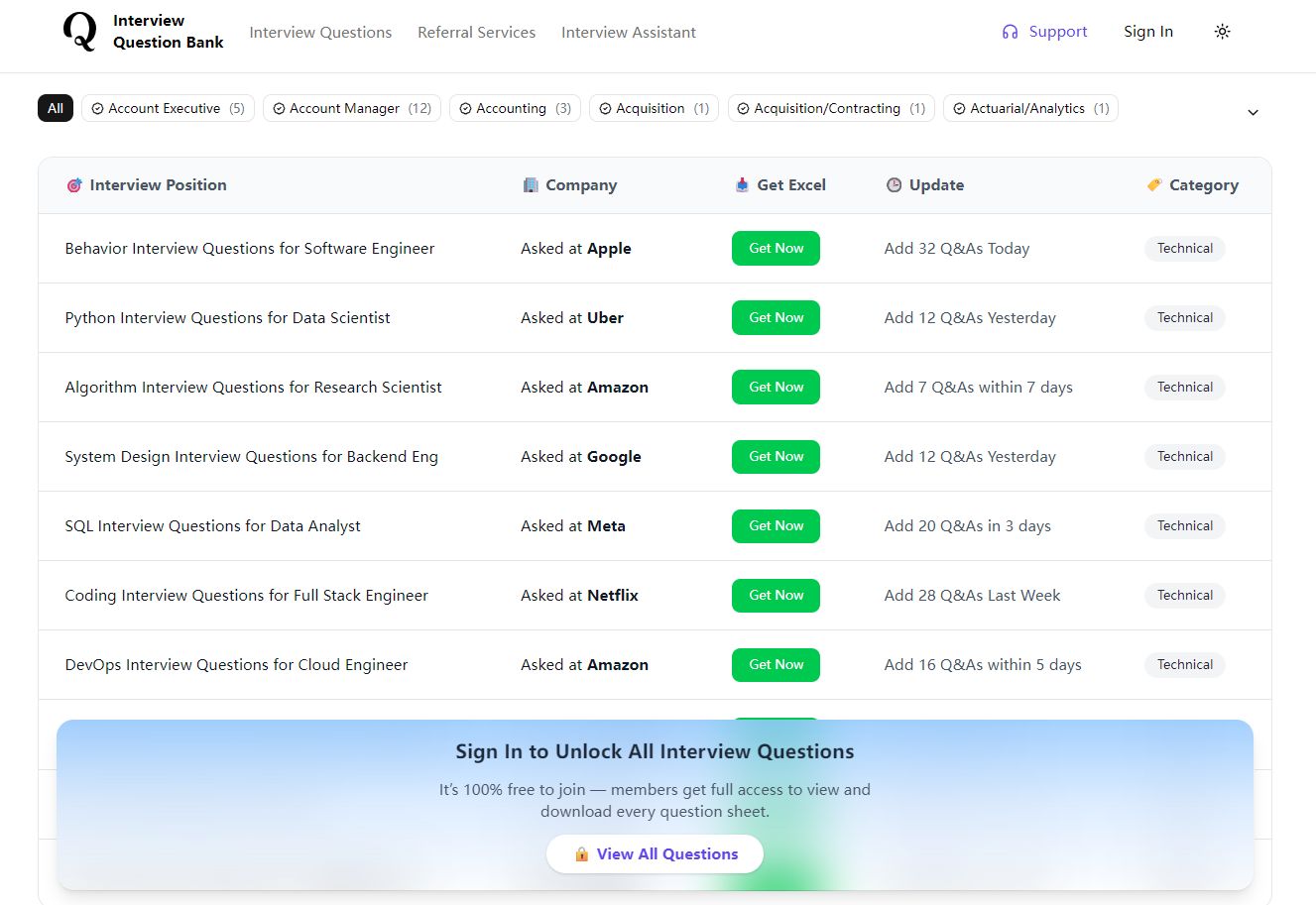

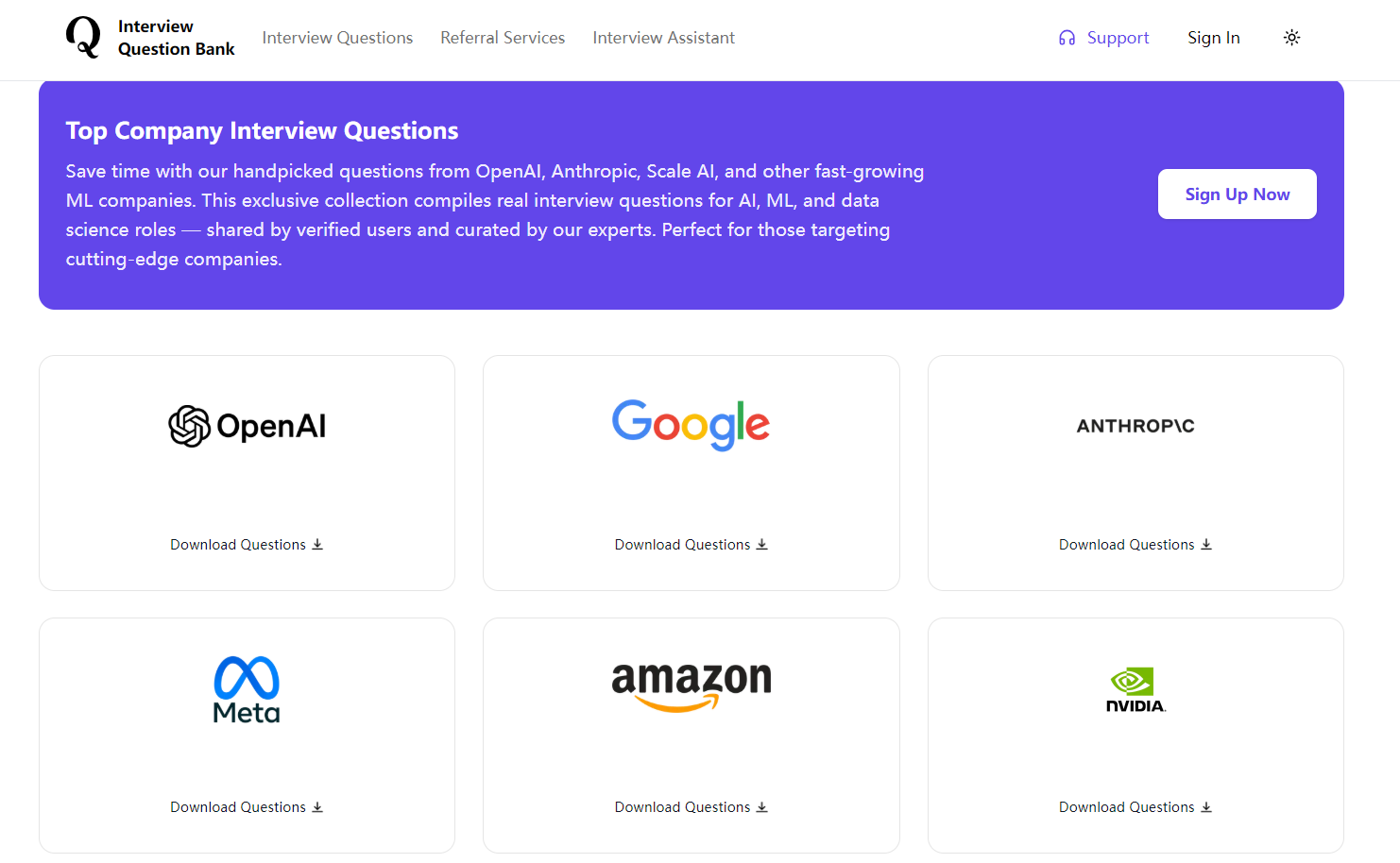

IQB (Interview Question Bank) is most useful when you treat it like a database asset—structured entries you can retrieve by company, role, and topic, then plug into a practice loop. If you want a broader hub for browsing and comparing prompts first, start from the Interview Questions & Answers hub.

A database-style bank is basically three things:

- Structured metadata (company, role, topic taxonomy, round type)

- Fast retrieval (filters that let you build a set in minutes)

- Repeatable reuse (the same set comes back when you need spaced redo)
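To make the “database, not a list” idea concrete, here is a minimal Python sketch of structured metadata plus filter-based retrieval. The `Question` fields, the 1–3 difficulty scale, and the `retrieve` helper are hypothetical illustrations, not IQB’s actual schema or API.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Question:
    """One bank entry; fields mirror the metadata listed above (hypothetical schema)."""
    qid: str
    company: str
    role: str
    topic: str
    round_type: str  # "coding", "system_design", or "behavioral"
    difficulty: int  # assumed scale: 1 (easy) to 3 (hard)


def retrieve(
    bank: Iterable[Question],
    *,
    company: Optional[str] = None,
    role: Optional[str] = None,
    round_type: Optional[str] = None,
    topics: Optional[set[str]] = None,
    max_difficulty: int = 3,
    limit: int = 12,
) -> list[Question]:
    """Return up to `limit` questions that match every filter that is set."""
    picked = []
    for q in bank:
        if company is not None and q.company != company:
            continue
        if role is not None and q.role != role:
            continue
        if round_type is not None and q.round_type != round_type:
            continue
        if topics is not None and q.topic not in topics:
            continue
        if q.difficulty > max_difficulty:
            continue
        picked.append(q)
        if len(picked) == limit:
            break  # keep the practice set small (8-12 by default)
    return picked


bank = [
    Question("q1", "google", "backend", "graphs", "coding", 2),
    Question("q2", "google", "backend", "dp", "coding", 3),
    Question("q3", "google", "backend", "caching", "system_design", 2),
    Question("q4", "meta", "backend", "graphs", "coding", 2),
]
practice_set = retrieve(bank, company="google", round_type="coding", max_difficulty=2)
print([q.qid for q in practice_set])  # ['q1']
```

Because every filter is optional, the same function covers both a “one company + one round type” scope and a “one topic band” scope.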

That’s also where “company interview questions” become practical. You’re not trying to predict the exact prompt. You’re using company-specific tagging to steer your prep toward recurring themes—what shows up often enough to be worth rehearsing.

Do you want your prep to feel like collecting trivia… or like building a playbook?

Why “coding interview question bank” is a different beast than generic practice

A coding interview question bank earns its keep when it helps you pick problems that map to:

- the patterns the company tends to test,

- the difficulty band you’re likely to see for your level,

- and the communication style expected in the round (whiteboard-y, IDE, pair programming, etc.).

Practice platforms can give you volume. A bank gives you selection.

Company interview questions without the rumor mill

The healthiest stance: assume coverage varies by role and team, and avoid pretending any bank can guarantee what you’ll see. What it can do is help you stop wasting time on irrelevant prompts.

For example, if you’re prepping for a backend role with 3–8 years of experience (YOE), you usually need a split: core coding patterns, a system design baseline, and behavioral stories with metrics. A database-style bank helps you retrieve that split without improvising every week.

The value of a database-style bank is not “more questions.” It’s fewer, better-chosen questions that you can retrieve and redo on schedule.

(And yes, you can keep tools open side-by-side: IQB for retrieval, a Notion page for notes, GitHub for snippets, and something like LeetCode for timed execution. Tools should feel like a stack, not a religion.)

Question Bank vs Practice Platform vs Mock Platform

| Tool type | Best for | Company specificity | Feedback loop | Weakness | Cost/access |
|---|---|---|---|---|---|
| IQB (question bank) | Fast retrieval + coverage planning across coding/system design/behavioral | High (tags/filters; coverage varies by role/company) | Depends on your notes + redo system | Not the execution surface by itself | Varies by plan/tool |
| LeetCode (practice platform) | Timed solving + pattern repetition for coding rounds | Medium (some company signals; not always role-specific) | Strong for solving; weaker for communication + reflection unless you add notes | Can turn into volume-chasing | Free + paid tiers |
| interviewing.io (mock platform) | Pressure-testing explanations with real-time feedback | Low–Medium (varies by interviewer/match) | Immediate human feedback on communication | Less control over scope; scheduling friction | Often paid |

Here’s the non-dogmatic way to pick: if you’re overwhelmed, start with the question bank to cut scope. If you’re rusty on execution speed, use LeetCode as the treadmill. If you keep “knowing the answer” but explaining it badly, mocks are the fastest reality check.

What are you missing right now—selection, execution, or communication?

A common combo that stays sane: retrieve 8–12 targeted questions from a bank, run timed attempts on a practice platform, then do one mock to test explanation quality and nerves.

If you’re using the bank for company interview questions, treat the company tag as a scope filter (themes + difficulty bands), not a promise that you’ll see the exact prompt.

If you want a more guided execution surface for coding rounds (especially when you’re practicing explanation, not just correctness), it can help to pair your bank with an interview-focused coding copilot like Beyz’s Coding Assistant.

A 48-Hour Google Coding Round Prep Story

This is a composite example based on common candidate patterns, not a claim about any one person’s real experience.

Friday evening, I had that specific kind of panic where your brain starts bargaining. “If I just do a few hard problems, maybe I’ll be fine.” The Google coding round was in 48 hours. I’d been busy at work, and my prep was… optimistic.

My first time block was Friday 8:30–11:30pm. I opened a question bank, filtered to the role level I thought matched, and grabbed a small set instead of spiraling into “top 100” lists. I picked 10 coding prompts that looked like they shared patterns (arrays/strings, trees, graph-ish BFS/DFS, and one DP that felt inevitable).

The first thing that went wrong: I misread constraints on problem #2 and built a solution that was fine in theory but blew up on time complexity. I only noticed because I forced myself to do a timed attempt and wrote down the complexity before touching code. It was annoying, but it was clean feedback: I wasn’t paying attention early enough.

The second thing that went wrong: I hit a classic debugging spiral. Problem #5 looked easy, so I rushed, got a wrong answer on an edge case, and then spent 20 minutes “fixing” symptoms instead of re-checking assumptions. I ended the night with that gross feeling of working hard and getting nowhere.

Saturday morning, second time block: 9:00am–1:00pm. I did something that felt almost too simple: I pulled up my notes and ran a redo loop. Same questions. Shorter timer. Focus on explanation and common failure points. Retrieve → timed attempt → review → redo.

The “aha” improvement was realizing my bottleneck wasn’t solving—it was starting. I was wasting mental energy choosing an approach from scratch every time. So I wrote a tiny “first 60 seconds” script in my notes: restate constraints, choose pattern candidate, name two edge cases, and only then code. The next redo felt calmer, like I had a rail to hold onto. I also kept a tiny behavioral “backup script” (metrics + tradeoffs + one clean STAR arc) in a cheat sheet so I didn’t ramble when I got nervous—something like Beyz’s Interview Cheat Sheets can be handy for that.

By Saturday afternoon, I wasn’t magically perfect. But I stopped feeling like the questions were random. They started feeling like variations.

If you had 48 hours, would you rather do 30 new questions… or 10 questions twice with real review?

How to Build Your Own AI Practice System

I’m biased toward systems that don’t collapse the moment you get busy. The best setup is the one you can run on a bad week.

Here’s what worked for me (and for a lot of people I’ve seen prep seriously): a repeatable loop you can run with any stack—IQB or another bank, Notion or a Markdown file, LeetCode or your own editor, and a mock platform when you need pressure.

A simple loop:

- Choose scope (one role + one company, or one topic band).

- Retrieve 8–12 questions from your bank (coding + a small behavioral set if needed).

- Timed attempt (short timer; write complexity and edge cases before coding).

- Rubric-based review (what failed: approach, implementation, explanation, or nerves?).

- Spaced redo (same set comes back 2–3 days later; add new questions only when your misses stabilize).
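The redo step is the easiest one to let slide, so it can help to make the schedule mechanical. This Python sketch assumes a fixed 3-day gap (the loop above says 2–3 days) and a hypothetical “stabilized” rule: the last two rounds produced the same number of misses. `plan_next_round` and its parameters are illustrative names, not part of any tool mentioned here.

```python
from datetime import date, timedelta

# The loop suggests bringing the set back 2-3 days later; 3 is an arbitrary pick.
REDO_GAP_DAYS = 3


def misses_stabilized(miss_history: list[int], window: int = 2) -> bool:
    """Hypothetical rule: misses have 'stabilized' when the last `window`
    rounds produced the same miss count."""
    if len(miss_history) < window:
        return False
    recent = miss_history[-window:]
    return max(recent) == min(recent)


def plan_next_round(
    current_set: list[str],
    miss_history: list[int],
    last_attempt: date,
    new_pool: list[str],
    add_count: int = 2,
) -> tuple[date, list[str]]:
    """Return the next redo date and the question IDs to practice on it."""
    redo_date = last_attempt + timedelta(days=REDO_GAP_DAYS)
    next_set = list(current_set)  # the same set comes back
    if misses_stabilized(miss_history):
        # Grow the set only once repeated rounds stop changing.
        fresh = [q for q in new_pool if q not in next_set]
        next_set.extend(fresh[:add_count])
    return redo_date, next_set


when, qs = plan_next_round(["q1", "q2", "q3"], [4, 2], date(2026, 1, 24), ["q4", "q5", "q6"])
print(when, qs)  # 2026-01-27 ['q1', 'q2', 'q3']
when, qs = plan_next_round(["q1", "q2", "q3"], [2, 2], date(2026, 1, 27), ["q4", "q5", "q6"])
print(when, qs)  # 2026-01-30 ['q1', 'q2', 'q3', 'q4', 'q5']
```

Keeping `add_count` small means a new question only enters once the old set has settled, which matches the “add new questions only when your misses stabilize” rule.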

I keep the “review” brutally practical. One sentence for the mistake, one sentence for the fix, one sentence for the trigger (“I rush when it looks easy”). It’s not therapy, it’s pattern recognition.
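The three-sentence review can live in a plain notes file, but storing it as structured records makes recurring triggers easy to spot. In this Python sketch, the four categories come from the rubric step of the loop; `ReviewNote` and `recurring_triggers` are hypothetical names, and triggers are matched as exact strings, so it assumes you reuse the same wording for the same habit.

```python
from collections import Counter
from dataclasses import dataclass

# Failure categories taken from the rubric-based review step.
CATEGORIES = {"approach", "implementation", "explanation", "nerves"}


@dataclass(frozen=True)
class ReviewNote:
    qid: str
    category: str  # one of CATEGORIES
    mistake: str   # one sentence: what went wrong
    fix: str       # one sentence: what to do instead
    trigger: str   # one sentence: the situation that sets it off

    def __post_init__(self) -> None:
        # Reject categories outside the rubric so the log stays comparable.
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category}")


def recurring_triggers(notes: list[ReviewNote], min_count: int = 2) -> list[tuple[str, int]]:
    """Triggers seen at least `min_count` times, most frequent first."""
    counts = Counter(n.trigger for n in notes)
    return [(t, c) for t, c in counts.most_common() if c >= min_count]


notes = [
    ReviewNote("q2", "approach", "Missed the input-size constraint.",
               "Write complexity before coding.", "I rush when it looks easy"),
    ReviewNote("q5", "implementation", "Failed an empty-input edge case.",
               "Name two edge cases first.", "I rush when it looks easy"),
    ReviewNote("q7", "explanation", "Rambled about tradeoffs.",
               "State the tradeoff in one line.", "I go quiet under pressure"),
]
print(recurring_triggers(notes))  # [('I rush when it looks easy', 2)]
```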

Where does AI fit without turning your prep into copy-paste? I treat it like a coach for structure: it helps me keep a rubric, tighten explanations, and turn mistakes into redo prompts. If you want that kind of workflow built-in, Beyz’s Prep tools and Practice Mode can sit next to your usual stack (Notion, GitHub, LeetCode) without becoming the whole story.

Are you building a loop you can repeat… or a plan you can only follow when life is perfect?

The “AI practice system” is a loop: retrieve a tight set, practice under time, review with a rubric, and redo on schedule until you stop making the same mistakes.

If you want a database-style layer for picking focused practice sets, try the IQB interview question bank.

And if you want to rehearse with the same context you’ll use later, pair it with Beyz Practice Mode.

If you’re trying to stop “random prep” and start building recall, pick a narrow scope and run the loop for one week: retrieve 8–12 questions, do timed attempts, review with a rubric, then redo the same set until your first minute becomes automatic. The goal isn’t more questions—it’s fewer questions that come back on schedule.

Frequently Asked Questions

Are interview question banks worth it?

They’re worth it if the bank behaves like a database, not a blog list. A good bank helps you retrieve the right questions for your role and target company, practice under time pressure, and repeat the same patterns until they’re boring. The bad version is a rabbit hole: you do 40 random prompts and still freeze when the interviewer tweaks the wording. If you have 3–8 years of experience and are juggling work, the real value is efficiency: fewer questions, better coverage, and a tighter review loop.

How many questions should I practice per week?

Most people do better with fewer questions and more repetition. Start with 8–12 questions in one narrow scope (one role + one topic area, or one company + one round type). Do timed attempts, then redo the same set later in the week after reviewing mistakes. If you can only spare 3–5 hours weekly, the goal isn’t volume—it’s building recall for patterns: common constraints, edge cases, and the way you explain tradeoffs. Add new questions only when your redo accuracy and explanation quality stop improving.

Where can I find company interview questions that aren’t just rumors?

Treat company-specific questions as signals rather than promises. The most straightforward technique is triangulation: a structured question bank with company/role tags, targeted practice on canonical patterns (for coding), and a mock platform to pressure-test your communication. When you find recurring themes (for example, particular data structures or system design components), practice those rather than one specific prompt. Check for recency and role match: an SRE loop won’t mirror an Android loop. The aim is preparation that points in the right direction, not predicting the exact question.

What’s the difference between the IQB question bank and LeetCode?

The IQB interview question bank is about retrieval and coverage: it organizes prompts by company, role, and topic so you can pick the right practice set quickly. LeetCode is primarily a practice platform: great for timed problem solving and pattern reps, but its company specificity and its behavioral/system design coverage depend on which features and lists you use. Many people combine them: use the bank to choose what to practice, LeetCode to execute timed attempts, and a notes system (Notion/GitHub) to track mistakes and redo schedules.
